2023. 6. 23. 10:28ㆍPython

vscode 기본

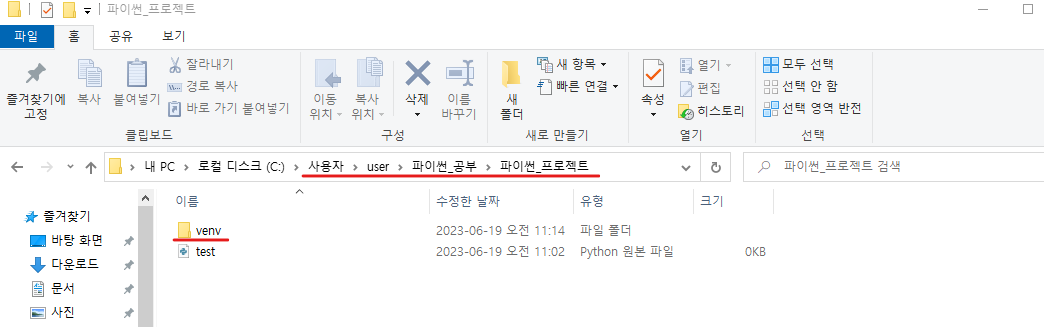

파이썬_프로젝트 폴더 하나 생성하여 vscode에서 열기

파일 생성 test.py

유용한 확장팩 설치

Snippet

Trailing Spaces

Prettier - Code formatter

Tabnine AI Autocomplete for Javascript, Python, Typesc

Todo Tree

유용한 기능 setting

저장할 때 띄워쓰기 같은 것들 제대로 변환하여 저장해주는 기능

리프레쉬 기능

ctrl + p

검색창 뜨면

'>' 치고 명령어(? 치면 vscode 환경 설정 가능

developer restart ~ : vscode 멈추거나 실행 잘 안 될 때 사용

터미널 켜기

제목줄의 Terminal > new Terminal 클릭

+ 아이콘 눌러서 git bash 클릭

가상 환경 설정

터미널에 아래 명령어 치기

python -m venv venv(가상 환경 이름)

폴더 경로로 이동하면 가상 환경 폴더 생성된 것 확인 가능!

가상 환경 진입 명령어

source ./venv/Scripts/activate

가상 환경에 진입 성공하면 아래 이미지처럼 가상환경이름이 뜸!

*참고 가상 환경에서 벗어나는 명령어

deactivate

클래스 생성 및 객체 생성 방법

#클래스 생성

class TestClass:

var1 = 1

#객체 생성 ->의 의미 : None - 함수 아무것도 반환 x, int - 정수형을 반환

# __init__ : java에서의 생성자

def __init__(self) -> None:

pass #없으면 error

def func1(self): #self 매개변수로 넣어주면 java의 this와 동일 기능

self.var1 = 2

return self.var1

리뷰 예측 프로젝트

새 파일 생성

review_project.py

colab에서 리뷰 예측한 코드 차례대로 가져오기

*참고

매번 프로젝트 할 때마다 import 및 install 해야 함

터미널에서 명령어 실행

pip install konlpy

import 코드 가져와서 review_project.py 처음에 붙여 넣은 후

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import re

import urllib.request

from konlpy.tag import Okt

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.preprocessing.sequence import pad_sequences

from tensorflow.keras.models import load_model

from keras.preprocessing.text import tokenizer_from_json

print(1)

class ReviewPredict():

review_model

def __init__(self) -> None:

pass터미널에서 명령어 실행하여 라이브러리 설치

pip install pandas numpy matplotlib

pip install tensorflow

터미널에서 명령어 실행하여 1 출력되는지 확인

python review_predict.py

만약 아래와 같은 오류 뜨는 경우

맨 아래쪽 url로 접속

아키텍처 다운로드 후 명령어 다시 실행

*tip

vscode에서 해당 변수명 모두 바꾸고 싶을 때 f2 누르고 변수명 설정하면 동일 변수명 한번에 바뀜.

데이터 전처리 작업

#데이터 전처리

class ReviewPredict():

data = pd.DataFrame

loaded_model = load_model("best_model.h5")

#생성자

def __init__(self, data:pd.DataFrame, model_name) -> None:

self.data = data

# self.loaded_model = load_model(model_name)

def process_data(self): #type: ignore

tmp_data = self.data.dropna(how = 'any') #null 값이 존재하는 행 제거

# 한글과 공백을 제외하고 모두 제거, \s : 공백(빈 칸)의미

tmp_data['document'] = tmp_data['document'].str.replace("[^ㄱ-ㅎㅏ-ㅣ가-힣\s]", "")

#공백으로 시작하는 데이터를 빈 값으로

tmp_data['document'] = tmp_data['document'].str.replace('^ +', "")

#빈 값을 null 값으로

tmp_data['document'].replace('', np.nan, inplace=True)

return tmp_data

def sentiment_predict(self, new_sentence):

okt = Okt()

tokenizer = Tokenizer()

stopwords = ['의','가','이','은','들',

'는','좀','잘','걍','과','도',

'를','으로','자','에','와','한','하다']

max_len = 30

new_sentence = re.sub(r'[^ㄱ-ㅎㅏ-ㅣ가-힣 ]', '', new_sentence)

new_sentence = okt.morphs(new_sentence, stem=True) #토큰화

new_sentence = [word for word in new_sentence if not word in stopwords] #불용어 제거

encoded = tokenizer.texts_to_sequences([new_sentence]) #정수 인코딩

pad_new = pad_sequences(encoded, maxlen = max_len) #패딩

score = float(self.loaded_model.predict(pad_new)) #예측

if(score > 0.5):

print("{:.2f}% 확률로 긍정 리뷰입니다.\n".format(score * 100))

else :

print("{:.2f}% 확률로 부정 리뷰입니다.\n".format((1-score) *100 ))모델 불러오기

best_model.h5 파일 프로젝트와 같은 경로에 추가

app.py 파일 생성

from flask import Flask

app = Flask(__name__)

@app.route('/test1')

def test():

return "Hello"

if __name__ == '__main__':

app.run(debug=True, port=5000)flask 설치

터미널에 명령어

pip install Flask

설치 후

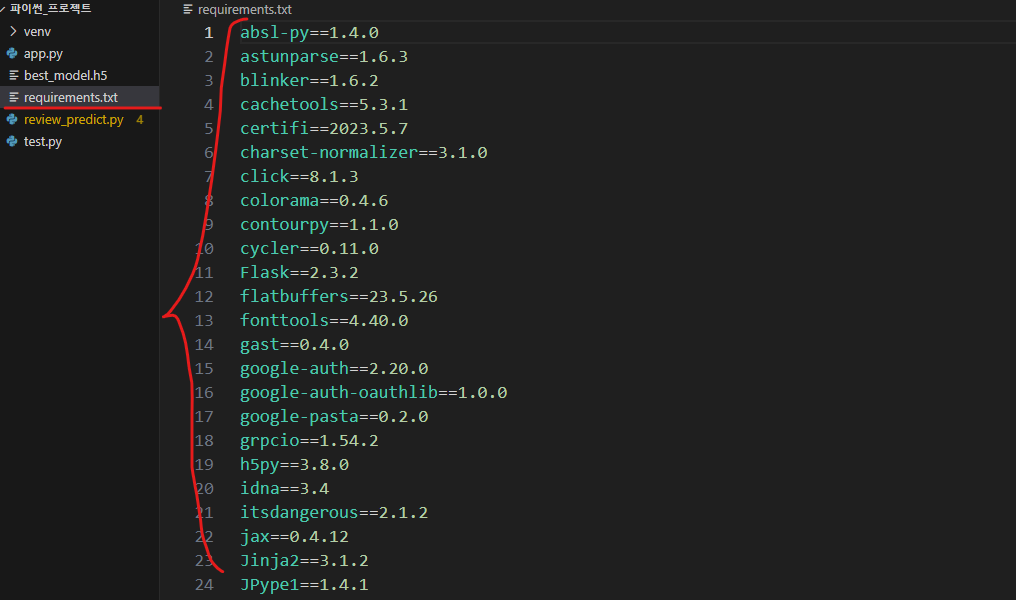

pip freeze > requirements.txt 명령어

> 새로운 파일 하나 생성됨.

> 설치된 패키지 목록에 대한 정보 만드는 것

다른 프로젝트 작업 시 requirements.txt 안에 있는 패키지들을 모두 설치하기 위해서는 아래 명령어를 입력

pip install -r requirements.txt

명령어 치면 이전에 했던 install 작업 안 해도 한번에 설치 가능

- flask에서 get method를 통해 querystring 받아오기

from flask import Flask, request

import review_predict as rp

import os

os.environ['JAVA_HOME'] = r'C:\Program Files\Java\jdk-17\bin\server'

app = Flask(__name__)

@app.route("/test")

def test():

sentence = request.args.get("sentence")

return sentence

@app.route("/predict")

def predict_review_good_or_bad():

sentence = request.args.get("sentence")

if sentence == None: return "sentence를 입력해주세요." #예외 처리 문장 입력하라는 return

reviewPredict = rp.ReviewPredict()

result = reviewPredict.sentiment_predict(sentence)

return result

if __name__ == '__main__':

app.run(debug=True, port=5000)

jvm 오류 발생 시

환경 변수 설정 아래 경로 복사

시스템 속성에서 환경 변수 설정

시스템 변수에서 새로 만들기해서 경로 추가 후 vscode 재실행

위 설정해도 안 될 경우 아래 코드 추가

import os

os.environ['JAVA_HOME'] = r'C:\Program Files\Java\jdk-17\bin\server'

tokenizer.json 파일 vscode에 추가

json 확장팩 설치

설치 후 설정창에서 json-zain 검색 후 아래 항목 체크

코드 수정

#데이터 전처리

class ReviewPredict():

data:pd.DataFrame

loaded_model = load_model("best_model.h5")

tokenizer = None

#생성자

def __init__(self) -> None:

with open('tokenizer.json') as f:

data = json.load(f)

self.tokenizer = tokenizer_from_json(data)

pass

# self.data = data

# self.loaded_model = load_model(model_name)

def process_data(self): #type: ignore

tmp_data = self.data.dropna(how = 'any') #null 값이 존재하는 행 제거

# 한글과 공백을 제외하고 모두 제거, \s : 공백(빈 칸)의미

tmp_data['document'] = tmp_data['document'].str.replace("[^ㄱ-ㅎㅏ-ㅣ가-힣\s]", "")

#공백으로 시작하는 데이터를 빈 값으로

tmp_data['document'] = tmp_data['document'].str.replace('^ +', "")

#빈 값을 null 값으로

tmp_data['document'].replace('', np.nan, inplace=True)

return tmp_data

def sentiment_predict(self, new_sentence):

okt = Okt()

# tokenizer = Tokenizer()

stopwords = ['의','가','이','은','들',

'는','좀','잘','걍','과','도',

'를','으로','자','에','와','한','하다']

max_len = 30

new_sentence = re.sub(r'[^ㄱ-ㅎㅏ-ㅣ가-힣 ]', '', new_sentence)

new_sentence = okt.morphs(new_sentence, stem=True) #토큰화

new_sentence = [word for word in new_sentence if not word in stopwords] #불용어 제거

encoded = self.tokenizer.texts_to_sequences([new_sentence]) #정수 인코딩

pad_new = pad_sequences(encoded, maxlen = max_len) #패딩

score = float(self.loaded_model.predict(pad_new)) #예측

if(score > 0.5):

return "{:.2f}% 확률로 긍정 리뷰입니다.\n".format(score * 100)

else :

return "{:.2f}% 확률로 부정 리뷰입니다.\n".format((1-score) *100 )git bash로 들어와서 터미널에

python app.py 실행

해당 포트로 접속

url+/경로?sentence=긍/부정 확인할 문장치면 아래처럼 나옴!

'Python' 카테고리의 다른 글

| 데이터 분석 (4) 컬럼을 활용한 데이터 확인 (0) | 2023.07.07 |

|---|---|

| 파이썬 자바로 가져오는 법 (0) | 2023.06.19 |

| 대시보드 시각화 - vscode로 html 작성 (0) | 2023.06.12 |

| 웹데이터 크롤링하여 csv 생성하기 (0) | 2023.06.07 |

| 웹 크롤링 (4) 스타벅스 매장 정보 크롤링 (0) | 2023.06.01 |